What the New York City Fire Epidemic Can Teach Today's Analysts: A Review of "The Fires"

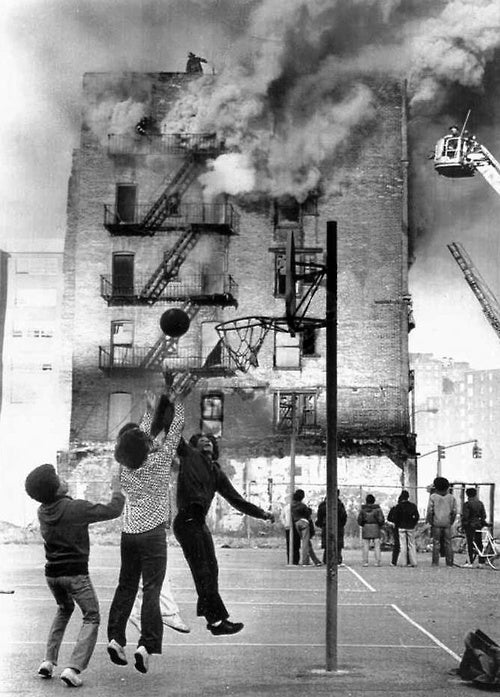

In 1977, the New York Yankees faced off against the Los Angeles Dodgers in the World Series. Before one of the games, a fire broke out near the stadium. Audiences seem to remember the announcer, Howard Cosell, saying "There it is, ladies and gentlemen, the Bronx is burning" as the Yankees lost and the camera panned upward to show the lingering smoke. The phrase captured the essence of the game and, more importantly, the epidemic of fires in the city. It even became the title of an ESPN television show about the World Series. Never mind that the actual footage of the game doesn't include this phrase.

Though the quote may be apocryphal, there truly was an epidemic of fires throughout New York City in the 1970s. I was turned on to a fascinating history of these fires while listening to What's The Point, a podcast from FiveThirtyEight. The episode featured a conversation with Joe Flood, author of The Fires: How a Computer Formula, Big Ideas, and the Best of Intentions Burned Down New York City--and Determined the Future of Cities.

This history, in part, involves data, complex analyses, and the RAND Corporation. This, of course, fascinated me, because I went to graduate school at the Pardee RAND Graduate School (for all practical purposes, part of RAND), so I read Flood's book. Flood's story of the fires reminded me of some prescient lessons that, in some form, are relevant to many analysts and data scientists today.

The History

Flood's book details the history of the 1970s fire epidemic (he also summarizes it nicely in the podcast). In this post, I will provide a relatively brief summary, but check out either the podcast or the book to learn more. I will also state up front that the history is probably somewhat in question; RAND may not tell the story the same way Flood did. After I summarize the history roughly as Flood describes it (with some of my own knowledge mixed in), I will talk about its complexity a little more.

The RAND Corporation is a non-profit public policy think tank that spun off from the Air Force after World War Two. Many of the early researchers specialized in a field of applied mathematics called Operations Research. In this discipline, practitioners use data and mathematical models to identify the business or policy choices that optimize some objective. Mathematically speaking, the decision is represented as a function to be optimized subject to a set of constraints: a model. Such models are frequently built from a mixture of basic economic theory, some technical knowledge about the subject at hand, and data. In the 1970s, RAND opened a branch in New York City to focus on urban issues. One of its primary, high-profile projects was a model to optimize the placement of fire stations.

This research coincided with many other events in New York City. The city faced severe budget deficits, which may have led to fewer inspections to ensure that buildings met fire codes. A program of slum removal, enacted by Robert Moses, left certain neighborhoods abandoned and ripe to burn. A new Fire Marshal, John O'Hagan, was appointed. He took an interest in new techniques to fight and prevent fires, but was primarily focused on large new skyscrapers. O'Hagan was also obsessed with increasing the efficiency of the fire department. Finally, there was a growing trend of individuals burning their own buildings for an insurance payout (though it is unclear exactly when arson began to spike).

O'Hagan and Mayor Lindsay worked with researchers at the New York City RAND Institute to identify the optimal placement of fire stations. According to Flood, this tool was used to identify stations that could be closed with minimal impact.

Flood also describes something I found fascinating: fires can be thought of as an observable indicator of rising poverty. In areas with high poverty, buildings are less likely to be up to code. Additionally, drug use rises, and residents are more likely to fall asleep while smoking cigarettes. Apparently, this was a large cause of fires.

The distribution of fires by poverty level is critical because of what at first seems to be a small detail of the model. The model would group neighborhoods by socio-economic status and ensure that the distribution of fire stations would not shift between groups. That is, the model would never suggest closing a fire station in a wealthy neighborhood to open a new one in a poor neighborhood. In retrospect, this seems like a pretty bad constraint if fires are concentrated in the poorest neighborhoods. As neighborhoods change, it would make sense to change the distribution of stations.

But it's not a completely ridiculous assumption. People in wealthy neighborhoods pay more in property taxes, and part of their decision to live in these neighborhoods is the higher level of government services they receive. Individuals with different preferences for consuming government services can choose their town or neighborhood accordingly. For example, families with children often choose to live in more expensive neighborhoods for the better schools, and the parents sometimes move out once they no longer have school-aged children. This is called Tiebout sorting. This assumption in the model ensures residents receive the public goods they "purchased." But it could also be interpreted as kowtowing to the wealthy and prioritizing the safety of those who have a political voice over a greater number of less wealthy constituents.
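To make the effect of that kind of constraint concrete, here is a minimal toy sketch in Python. It is not RAND's model: the five neighborhoods, their fire counts, and the assumed 3-minute (station in the neighborhood) versus 8-minute (no station) response times are all invented for illustration, and the hypothetical `best_placement` function simply brute-forces every way to place three stations. Setting `keep_group_mix=True` imposes the rule described above: the number of stations in each income group must stay what it is today.

```python
from itertools import combinations

# Hypothetical data, invented for illustration: (neighborhood, income group, fires per year)
NEIGHBORHOODS = [
    ("A", "wealthy", 20),
    ("B", "wealthy", 15),
    ("C", "poor", 90),
    ("D", "poor", 120),
    ("E", "poor", 60),
]
CURRENT_STATIONS = {"A", "B", "C"}
NEAR, FAR = 3.0, 8.0  # assumed response minutes with / without a station in the neighborhood


def avg_response(stations):
    """Fire-weighted average response time for a given set of station locations."""
    total_fires = sum(fires for _, _, fires in NEIGHBORHOODS)
    weighted = sum(
        fires * (NEAR if name in stations else FAR) for name, _, fires in NEIGHBORHOODS
    )
    return weighted / total_fires


def group_counts(stations):
    """Number of stations located in each income group."""
    counts = {}
    for name, group, _ in NEIGHBORHOODS:
        if name in stations:
            counts[group] = counts.get(group, 0) + 1
    return counts


def best_placement(k, keep_group_mix):
    """Brute-force the k-station placement with the lowest average response time."""
    names = [name for name, _, _ in NEIGHBORHOODS]
    target = group_counts(CURRENT_STATIONS)
    options = []
    for combo in combinations(names, k):
        if keep_group_mix and group_counts(set(combo)) != target:
            continue  # the constraint: no shifting stations between income groups
        options.append((avg_response(set(combo)), combo))
    return min(options)


for flag in (True, False):
    score, combo = best_placement(3, keep_group_mix=flag)
    print(f"keep_group_mix={flag}: stations={combo}, avg response={score:.2f} min")
```

With the constraint, the best this toy can do is move the poor-group station from C to D (about 5.46 minutes on average); without it, all three stations go where the fires are (about 3.57 minutes). The numbers mean nothing on their own; the point is only that a constraint that looks like a small modeling detail can dominate the answer.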

As all these changes set in during the 1970s, the fire epidemic in New York City began.

In Flood's discussion with What's the Point host Jody Avirgan, he makes it out as if RAND's analysis was the primary cause of the fire epidemic. And his book title puts RAND's research front and center. But to be honest, I was a little surprised at the relatively minor role RAND and the fire models played in the text of the book. Flood describes a rich narrative, with multiple personalities at play, new policies across the board, and a budget in crisis. Of course, he also emphasizes that RAND's analysis was a large contributing factor.

In an interview with The Atlantic, Flood states that RAND researchers stand by their analyses and don't believe the models contributed to the fire epidemic. I searched but could not find any direct response to Flood's story.* The best I found was a paper from 1980 responding to some specific, earlier criticisms of their work.

As with many things on this blog, I am probably not qualified to judge the accuracy of the history. I would find it hard to believe that a fire epidemic began at the exact moment RAND was developing fire models for New York City, yet the two were unrelated. That said, a story that blames it on early data scientists is a good way to grab headlines right now. I suspect RAND's research was a contributing factor, but I don't know the exact proportion of blame. In fact, thinking of it in terms of proportion of blame may not be accurate at all. Would RAND's fire models have had different results if the city hadn't been facing a budget crisis? Would RAND have been hired at all without Lindsay's and O'Hagan's biases? New York's political system at the time is probably too complex to fully assign blame. Despite the title of Flood's book, complexity was actually my major takeaway from it.

As I reflected on this work, I pulled out some lessons for analysts and data scientists practicing today.**

Lessons

All Models Are Wrong, Some Are Useful. But How Can You Know?

On what seems like my first day of graduate school, one of our professors, Bart Bennet, provided us with some statistical wisdom: "All models are wrong, some are useful."*** I found myself both hearing and repeating this wisdom throughout my own research, both at RAND and since I graduated.

Flood's book describes a plethora of ways that the RAND fire models were "wrong." For example, to measure response times, RAND researchers provided firemen**** with stopwatches and asked them to track how long it took to arrive at fires. Years later, firemen told Flood they frequently did not take these measurements seriously. Some firemen believed a slow response time would get them more resources; others were afraid of punishment for slow responses. In both cases, firemen report letting these biases influence how they recorded data. Another way in which these models were wrong was a simplifying assumption that traffic congestion was constant across the city at all hours of the day. This assumption can affect the models' predictions of how response times change after a fire station closes.
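One way to see why a constant-congestion assumption matters: travel time is distance divided by speed, and averaging the speeds first and then dividing is not the same as averaging the travel times. The sketch below uses invented speeds for four equal-length periods of the day and invented distances to the nearest firehouse before and after a closure. The function names are hypothetical, and this is not a reconstruction of RAND's travel-time model.

```python
# Hypothetical average travel speeds (mph) in four equal-length periods of the day.
SPEEDS_MPH = {"night": 30, "morning rush": 10, "midday": 20, "evening rush": 10}


def minutes_constant_congestion(miles):
    """Travel time if we assume one all-day average speed."""
    avg_speed = sum(SPEEDS_MPH.values()) / len(SPEEDS_MPH)
    return 60 * miles / avg_speed


def minutes_varying_congestion(miles):
    """Average of the travel times actually experienced in each period."""
    times = [60 * miles / speed for speed in SPEEDS_MPH.values()]
    return sum(times) / len(times)


BEFORE, AFTER = 2.0, 3.5  # hypothetical miles to the nearest firehouse before / after a closure
for label, fn in [("constant", minutes_constant_congestion),
                  ("varying", minutes_varying_congestion)]:
    increase = fn(AFTER) - fn(BEFORE)
    print(f"{label}: {fn(BEFORE):.2f} -> {fn(AFTER):.2f} min (increase {increase:.2f})")
```

Because 1/speed is convex, the constant-congestion version understates both the travel times and the increase caused by the longer trip (roughly 5.1 versus 6.4 extra minutes with these made-up numbers).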

Of course there were ways in which the models were "wrong." It's a model! A model rocket that a kid launches in the backyard doesn't have all the intricacy and capability of a NASA rocket, but it represents some of the relevant details of a real rocket; likewise, these models are simplifications of reality. That's the point. It's a researcher's and data scientist's job to determine which simplifying assumptions can be made. The question a researcher must always ask when building these models is: do the small ways in which they are wrong change the larger, macro policy implications?

These particular models were held in great acclaim, not just by RAND researchers, but by the community at large. They were peer reviewed. They won the Edelman Prize (it's like the Oscars, but they invite nerds like me). On the other hand, these assumptions could have led to serious flaws. One strong criticism Flood points out is that the models were designed to optimize average response time. Fires might be an area where you don't want to plan for the average, but for the maximum response time (or some high threshold, like the 95th percentile), because the consequences of a slow response can be catastrophic.
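A small illustration of the difference between planning for the average and planning for the tail: two invented sets of response times with exactly the same mean but very different worst cases. The percentile helper uses the simple nearest-rank definition; other definitions interpolate between observations and can give slightly different values.

```python
import math

# Hypothetical response times (minutes) under two station plans, 20 fires each.
PLAN_A = [4] * 5 + [5] * 10 + [6] * 5
PLAN_B = [3] * 10 + [4] * 8 + [14, 24]


def mean(values):
    return sum(values) / len(values)


def percentile_nearest_rank(values, pct):
    """Smallest value with at least pct percent of observations at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


for name, times in (("Plan A", PLAN_A), ("Plan B", PLAN_B)):
    print(f"{name}: mean={mean(times):.1f} min, "
          f"95th percentile={percentile_nearest_rank(times, 95)} min, "
          f"worst={max(times)} min")
```

An optimizer that only sees the mean is indifferent between these two plans; one that targets the 95th percentile strongly prefers Plan A.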

From the descriptions of the models in Flood's book, he shows that some of the details were wrong, but it would take much more research to determine whether the conclusions were wrong. The stopwatches were probably far from a perfect way to measure response time. However, even with their biases and inaccuracies, they may still have provided far more information than was previously available. Here's the big outstanding question: would decisions about closing stations have been different if response time had been measured more accurately? To put it another way, how large could the measurement error be while still leading to the same conclusions?
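The margin-of-error question can be framed as a simple break-even calculation. Suppose, hypothetically, that the model ranks stations by the measured increase in average response time if each one closes, and that every measurement could be off by up to some fraction in either direction. The choice of which station to close is robust only if the top candidate's worst case still beats the runner-up's best case. The station names, numbers, and helper functions below are invented, and real measurement error (like the stopwatch biases Flood describes) need not be this tidy or symmetric.

```python
# Hypothetical measured increase in average response time (minutes) if each station closes.
MEASURED_IMPACT = {"Station 1": 0.40, "Station 2": 0.55, "Station 3": 1.10, "Station 4": 2.30}


def robust_choice(impacts, error):
    """Station to close if the choice survives any measurement error up to +/- error
    (as a fraction of each measurement); None if the ranking could flip."""
    ranked = sorted(impacts, key=impacts.get)
    best, runner_up = ranked[0], ranked[1]
    worst_case_best = impacts[best] * (1 + error)
    best_case_runner_up = impacts[runner_up] * (1 - error)
    return best if worst_case_best < best_case_runner_up else None


def breakeven_error(impacts):
    """Error level at which the top two candidates could swap places."""
    low, second = sorted(impacts.values())[:2]
    return (second - low) / (second + low)


print(f"Decision holds for errors below {breakeven_error(MEASURED_IMPACT):.1%}")
for err in (0.05, 0.10, 0.20):
    print(f"error +/-{err:.0%}: close {robust_choice(MEASURED_IMPACT, err)}")
```

With these numbers, the decision survives measurement errors up to about 15.8 percent. A check like this tells a decision-maker how much bad data a conclusion can tolerate, which is exactly the question the stopwatch problem raises.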

Of course, property and lives were at stake. The lesson here is that there is a huge onus on the researcher not only to justify each assumption, but also to measure the impact of getting the assumption wrong. When researchers know something is wrong, they need to find a way to measure how wrong it is and the cost or risk of it being too wrong, and to make sure this is transparent to the policy decision-maker.

Policy Stakeholders and Domain Experts May Not Serve As A Check

With that criticism in mind, I have found that many analysts (at RAND and in industry) do have systems in place to address the fact that "all models are wrong." One feature of such systems is respecting domain expertise and addressing stakeholders' (managers, customers, or parties affected by a policy) concerns head on. That is, analysis isn't done in a vacuum, away from those who have worked in the field or have some skin in the game.

In my research, this meant working with technical experts who had worked at water agencies or the L.A. Department of Health. It also meant presenting to stakeholder groups with less technical expertise but often with some decision-making capacity. I was always humbled by the knowledge of people in these roles and enjoyed the opportunity to learn from them. In fact, these experiences helped form one of my fundamental life philosophies: everyone is an expert in something; find that thing and learn from it.

The more of their knowledge I could take in, the more I could do to ensure my models and analysis matched their world view. If I was making an assumption, I could learn whether it was an assumption they would be comfortable with. If it didn't ring true, I could revisit it. Additionally, we would confirm that the results of the analysis matched the intuition of stakeholders. If a model produced a result that did not, it was on us to find the cause.

Obviously, we had a responsibility to show the right answer, even if it didn't match stakeholder intuition. However, we also knew not to underestimate the expertise of these stakeholders; a model result that doesn't match intuition could be a clue that something is wrong.

This puts a lot of onus on the analyst. It's not enough to do the mathematical modeling and statistics correctly. Analysts also need to communicate with stakeholders, interpret their feedback, and incorporate it into the analysis. I had the privilege of working with some researchers at RAND who I believe were truly experts at this (particularly Gery Ryan). I also believe that RAND researchers of the time took this seriously. They spent time with firemen and even spent a few nights in the firehouse (though Flood suggests these outings may not have been very fruitful). Most importantly, they had an audience with, and a line of access to, Fire Marshal O'Hagan.

However, this book reminded me that there is another side to this stakeholder relationship. These experts are human, and they exist in a complex policy environment. They may have limits to their own knowledge and their own biases, and they may respond to external incentives. I am not at all suggesting that they are not honestly imparting knowledge, only that their knowledge is filtered through their own experiences. For example, O'Hagan had both political connections with some of the wealthier residents (like judges, who may not want the fire station on their corner shut down) and an intrinsic interest in high-profile, complex buildings like skyscrapers. So he may have been more inclined to let the Tiebout sorting assumption stand than he should have been. The biases of the researchers and the stakeholders were correlated.

While I fully believe stakeholder consultation is a necessary and valuable check on an analysis, this is a stark reminder that it's not sufficient. Analysts should consider the objectives of their stakeholders and push back when those objectives are not aligned with the ultimate policy objective. Additionally, analysts should take an active role in identifying and cultivating well-educated stakeholders who can reasonably assess, check, and criticize an analyst's work.

Data and Science Provide an Illusion of Efficiency

Flood describes O'Hagan as obsessed with proving that he could fight fires as efficiently as possible, and as happy to remove fire stations with little marginal value. The RAND model, as described in the book, could identify those stations. This is fine if there is high confidence in the model, but if there is some risk that it is wrong, the extra fire stations could serve as a reasonable hedge. Fires are the type of risk where you probably want to plan for a worst-case scenario, not an average scenario. Hedging can make a lot of sense.

So I started asking myself: "Were fire stations eliminated because the city had a false sense of confidence that it could do so with scientific efficiency, while other government services faced less severe cuts because there was no model?"

To put it in nerd-speak: were the fire models finding a local optimum for fire-station placement, when there may have been a broader global optimum for the city budget as a whole?
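The local-versus-global question can be sketched with a toy budget. Assume, purely hypothetically, that a city must cut four units of spending across three departments, each with an invented list of the harm caused by each successive unit cut. The "local" plan takes the whole cut from the department whose model says its first cuts are cheap; the hypothetical `global_allocation` function instead takes each unit where the next cut does the least harm. That greedy rule is optimal only under the stated assumption that each department's marginal harm never decreases. None of this is a claim about what actually happened in New York.

```python
# Hypothetical marginal harm of each successive unit of budget cut, per department.
# Assumed non-decreasing: each extra unit cut hurts at least as much as the last.
MARGINAL_HARM = {
    "fire": [1, 2, 6, 12],
    "sanitation": [2, 3, 4, 5],
    "parks": [1, 2, 3, 4],
}


def total_harm(allocation):
    """Sum of the marginal harms for the units cut from each department."""
    return sum(sum(MARGINAL_HARM[dept][:units]) for dept, units in allocation.items())


def global_allocation(units_to_cut):
    """Greedy: place each unit of cut where the next unit does the least harm."""
    allocation = {dept: 0 for dept in MARGINAL_HARM}
    for _ in range(units_to_cut):
        available = [d for d in MARGINAL_HARM if allocation[d] < len(MARGINAL_HARM[d])]
        cheapest = min(available, key=lambda d: MARGINAL_HARM[d][allocation[d]])
        allocation[cheapest] += 1
    return allocation


LOCAL_PLAN = {"fire": 4, "sanitation": 0, "parks": 0}  # whole cut where a model says it's "efficient"
best_plan = global_allocation(4)
print("local: ", LOCAL_PLAN, "harm =", total_harm(LOCAL_PLAN))
print("global:", best_plan, "harm =", total_harm(best_plan))
```

Here the local plan does 21 units of harm and the global plan 6. The numbers are made up; the point is that a department can be cut "efficiently" according to its own model and still be the wrong place to cut.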

I don't have an exact answer to this, but I suspect there are harms from applying scientific efficiency to one sector of government or business but not to others. Part of the onus needs to be on decision-makers to take a global, not local, view of policy decisions. But I also suspect analysts can frame their work appropriately by making comparisons outside their own research area, finding a broad set of constituents, and challenging stakeholders to think globally.

* Hey RAND, or anyone else really, if you have any resources to point me to, I would love to dive in.

** For a final time, I will make the point that I am not actually stating whether the RAND researchers followed these lessons or not. I am stating that Flood's narrative reminds me of the importance of these lessons.

**** As Flood points out, "firefighters" is the term generally used today, but back then they were called firemen, and they actually were all men. I use his terminology.